The Shocking Truth About RF Implantable Devices

By Jim Pomager, Executive Editor

Implantable wireless devices inherently carry real cybersecurity vulnerabilities, and designers must make mitigating them a priority.

Fans of the popular TV show Homeland will recall the alarming “Broken Hearts” episode near the end of last season. In it, al-Qaeda commander Abu Nazir kidnaps CIA agent Carrie Mathison and threatens to kill her unless Congressman/terrorist/Marine Nicholas Brody obtains the serial number for the U.S. Vice President’s pacemaker and delivers it to Nazir. Brody complies, sneaking into the Vice President’s office and sending the code to Nazir. The Vice President returns to find Brody in his office, but it’s too late — the terrorists have remotely hacked into the pacemaker and caused it to speed the Vice President’s heart rate. As Brody looks on impassively, the Vice President has a heart attack and dies.

How plausible was this scenario? Quite, claimed Barnaby Jack, a celebrated hacker and director of embedded security research at IOActive, in a blog post after the show aired. Jack explained how many elements of the gruesome scene were completely valid (if slightly exaggerated).

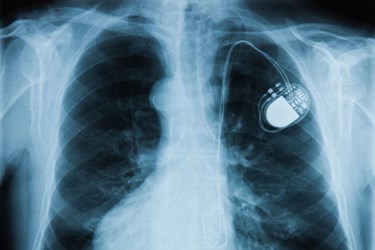

As those of you who design them already know, pacemakers and implantable cardioverter defibrillators (ICDs) monitor for irregularities in heart rate, also known as arrhythmias, and deliver low-voltage electrical pulses to gently nudge a misfiring heart back into its proper rhythm. ICDs take matters a step further, by administering high-voltage pulses to treat life-threatening arrhythmias, when necessary.

Pacemakers and ICDs have, for years, utilized radio frequency (RF) technology to transmit patient and device performance data wirelessly to a remote monitor/bedside transmitter (which in turn can send the information via the Internet to a physician). These radios operate in the MICS (Medical Implant Communication Service) frequency band — between 402 and 405 MHz — and pair with the monitor using the pacemaker’s serial number and/or device model number. The implantable device and monitor can communicate with each other over a range of up to 50 feet, a distance that could potentially be increased by using a high-gain antenna, Jack suggested.
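To put a rough number on the antenna remark, here is a back-of-the-envelope sketch in Python. It assumes idealized free-space propagation, where received power falls off with the square of distance, so each extra 6 dB of antenna gain roughly doubles the reachable distance. The function name and the gain values are illustrative assumptions, not figures from Jack's post, and a real link through body tissue and walls would likely fare worse.

```python
def range_with_extra_gain(base_range_ft: float, extra_gain_db: float) -> float:
    """Estimate range after adding antenna gain, under a free-space model.

    Assumption: received power scales as 1/d^2 (Friis free-space equation),
    so distance scales with the square root of the linear power gain.
    """
    return base_range_ft * 10 ** (extra_gain_db / 20)


if __name__ == "__main__":
    # 50 ft is the nominal range cited above; the gain values are hypothetical.
    for gain_db in (0, 6, 12, 20):
        estimate = range_with_extra_gain(50, gain_db)
        print(f"+{gain_db:>2} dB -> ~{estimate:.0f} ft")
```

Under these assumptions, the nominal 50 feet is a starting point rather than a hard limit, which is the substance of Jack's warning.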

Once the devices are paired and you understand the communication protocol, Jack said, “you essentially have full control over the device.” It’s not inconceivable that, over this wireless connection, a hacker could read and write the device’s memory, rewrite its firmware, modify its parameters, and yes, even deliver a high-voltage electric shock (in the case of an ICD). “In the future, a scenario like [the Homeland episode] could certainly become a reality,” Jack concluded.
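Why is pairing on a serial or model number so weak? The number is a static identifier, so anyone who learns it can simply present it. The Python sketch below contrasts that with a challenge-response handshake keyed on a shared secret. It is a minimal illustration under stated assumptions, not any manufacturer's actual protocol: the class and function names, the 16-byte nonce, the placeholder serial, and the pre-shared key are all hypothetical, and a real implant would also have to deal with key provisioning, emergency access, and battery constraints.

```python
import hashlib
import hmac
import secrets


class Implant:
    """Hypothetical implant that can demand proof of a shared key."""

    def __init__(self, serial: str, key: bytes):
        self.serial = serial
        self._key = key
        self._nonce = None

    def pair_by_serial(self, claimed_serial: str) -> bool:
        # Weak scheme: a static identifier is the only "credential".
        return claimed_serial == self.serial

    def challenge(self) -> bytes:
        # Fresh random nonce per attempt, so old responses cannot be replayed.
        self._nonce = secrets.token_bytes(16)
        return self._nonce

    def pair_by_response(self, response: bytes) -> bool:
        if self._nonce is None:
            return False
        expected = hmac.new(self._key, self._nonce, hashlib.sha256).digest()
        self._nonce = None  # each challenge is single-use
        return hmac.compare_digest(expected, response)


def sign(key: bytes, nonce: bytes) -> bytes:
    """What a legitimate monitor holding the key would compute."""
    return hmac.new(key, nonce, hashlib.sha256).digest()


if __name__ == "__main__":
    key = secrets.token_bytes(32)
    implant = Implant("SN-0001", key)  # serial is a made-up placeholder

    # Anyone who learned the serial can pair under the weak scheme...
    print("serial-only pairing:", implant.pair_by_serial("SN-0001"))

    # ...but fails the challenge without the key.
    implant.challenge()
    print("attacker response:", implant.pair_by_response(b"\x00" * 32))

    # The legitimate monitor, which holds the key, succeeds.
    nonce = implant.challenge()
    print("monitor response:", implant.pair_by_response(sign(key, nonce)))
```

The point of the contrast is that knowing who the device is (its serial number) should never be enough to command it.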

Researchers have demonstrated a similar attack on a Medtronic Maximo ICD, albeit in a lab (not a person) and over a distance of inches (not 50+ feet). The investigators (from the University of Washington, the University of Massachusetts, and Beth Israel Deaconess Medical Center) used the device’s radio to hack into the device, reprogram it, and shut it down. They published their seminal findings in the paper “Pacemakers and Implantable Cardiac Defibrillators: Software Radio Attacks and Zero-Power Defenses.”

The problem isn’t limited to pacemakers. At the McAfee FOCUS 11 conference in October 2011, Jack accessed an insulin pump (without prior knowledge of its serial number) through its radio link and caused it to deliver what would have been a fatal dose. And in February 2012, during the RSA Security Conference, he showed he could wirelessly hijack an insulin pump, this one implanted in a transparent mannequin, at a distance of up to 300 feet. Fellow hacker Jay Radcliffe, a senior security analyst for InGuardians and a diabetic, wirelessly hacked his own insulin pump (again from Medtronic) at the 2011 Black Hat USA computer security conference — demonstrating how a $20 RF transmitter purchased on eBay could be used to force the pump to dispense random doses or disable it altogether.

Understandably, relationships between hackers like Radcliffe and Jack and the device makers whose product flaws they expose have been, well, strained. The good news is that manufacturers are showing signs of heeding hackers’ advice on implantable RF device security, and on medical cybersecurity in general. In an interview with the Association for the Advancement of Medical Instrumentation (AAMI), Radcliffe singled out Medtronic (previously the object of his ire) for making legitimate progress in addressing cybersecurity in its devices, for instance by creating a C-suite executive position devoted to device privacy and security, and by working directly with the Department of Homeland Security. “They have been taking substantial steps to make this a big priority in their products and company culture,” he said.

The FDA has also stepped up its efforts to keep the industry focused on cybersecurity. In June, the agency issued a warning to medical device manufacturers and healthcare facilities, stating that the agency “has become aware of cybersecurity vulnerabilities and incidents that could directly impact medical devices or hospital network operations.” One of the warning’s specific concerns was malware on hospital computers, smartphones, and tablets that targets mobile devices to access patient data, monitoring systems, and implanted patient devices. The warning politely reminded device makers of their responsibility to put “appropriate mitigations in place to address patient safety and assure proper device performance,” including limiting opportunities for unauthorized access to medical devices. The FDA also issued draft guidance for manufacturers on managing cybersecurity, and announced plans to establish a cybersecurity laboratory for testing, among other things, devices that use Bluetooth or Wi-Fi to connect to computers.

But there is still much work to be done, and so the security advocates continue to apply pressure. At Black Hat 2013 earlier this month, IOActive’s Jack was scheduled to give a talk called “Implantable Medical Devices: Hacking Humans,” focused on the security of wireless implantable devices. Tragically, the 35-year-old died the week before the conference, so he was unable to discuss his latest findings (how a common bedside transmitter can be used to scan for, identify, and interrogate individual medical implants) or share ideas for how manufacturers can improve device security. (At the time of this writing, the cause of his death was still unknown.)

While the experts concur that a malicious Homeland-like attack on RF-based implants is unlikely, and some manufacturers have made great strides in protecting their products from wireless breaches, implantable device security is still a matter of utmost concern. After all, there are over 3 million pacemakers and close to 2 million ICDs in use today, and some half a million more are implanted each year. Plus, not all threats need be deliberate. Imagine the potential impact of simple malware on pacemaker performance, should it inadvertently infect the device through its wireless connection.

What should you do to close potential cybersecurity vulnerabilities in your wireless implantable design? For one, make device security an integral part of your overall development process. Also, put a higher premium on device security testing (most importantly, penetration testing to identify weaknesses in wireless defenses) and on remediating the issues that testing uncovers. Ignore cybersecurity and it will invariably come back to haunt you, whether it’s in the form of a lawsuit, a letter from the FDA, or the embarrassment (and bad press) of a hacker exposing your device’s flaws on an international stage.